We’re campaigning for reform of the laws relating to online abuse and for robust regulation of the tech platforms that perpetrators use, so that those responsible are held accountable.

Updates

- April 16, 2024: The Ministry of Justice has today (16th April 2024) announced that individuals who create sexually explicit deepfakes will face prosecution under a ne...

- April 12, 2024: A new survey of young people has found that gaps in schools’ education are driving them towards porn and social media for information about sex and re...

- June 30, 2023: Today (30th June 2023) the government has announced that it will amend the Online Safety Bill to require Ofcom to publish guidance for tech companies ...


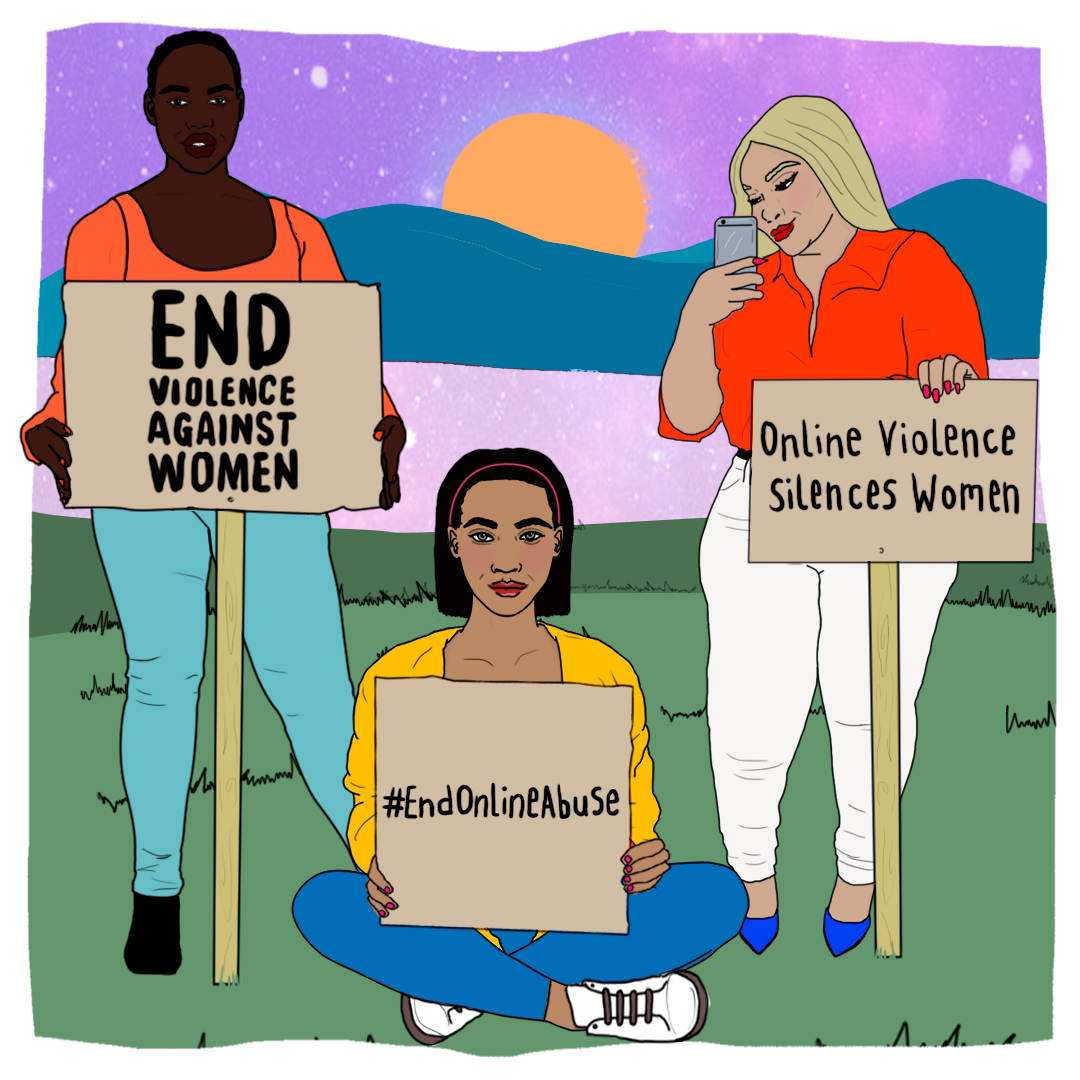

Women are 27 times more likely than men to be harassed online

1 in 5 women have experienced online harassment or abuse

Black women are 84% more likely to receive abusive or problematic tweets than white women

Online abuse includes cyberstalking, sexual harassment, grooming for exploitation or abuse, image-based sexual abuse (so-called ‘revenge porn’, upskirting, fakeporn, sexual extortion, and videos of sexual assaults and rapes), rape threats, doxxing of women’s personal information, tech abuse in intimate relationships, and much more. This abuse can have a devastating, long-lasting impact on survivors.

We should all be able to socialise, work, learn, get involved in activism or join a community online, free from the threat of abuse. But women’s and girls’ rights and freedoms are being restricted online, as we self-censor, avoid certain platforms or go offline altogether to avoid harm. And the problem is only getting worse as our online world becomes ever more interwoven with our daily lives.

A problem of this magnitude must be named and tackled. We believe that the Online Safety Bill gives the government a once-in-a-generation opportunity to address violence against women and girls. It can do this by requiring social media companies to take action to prevent and end abuse on their platforms, and by requiring tech companies and the regulator to examine the relationship between perpetrators and the platforms they use, creating a system of accountability. But as things stand, women and girls are being left out of this law.

Tell the government to #EndOnlineAbuse and include women and girls in the new online safety law.
